ExLlamaV2 is a fast inference library that enables the running of large language models (LLMs) locally on modern consumer-grade GPUs. It is designed to improve performance compared to its predecessor, offering a cleaner and more versatile codebase.

https://github.com/turboderp/exllamav2

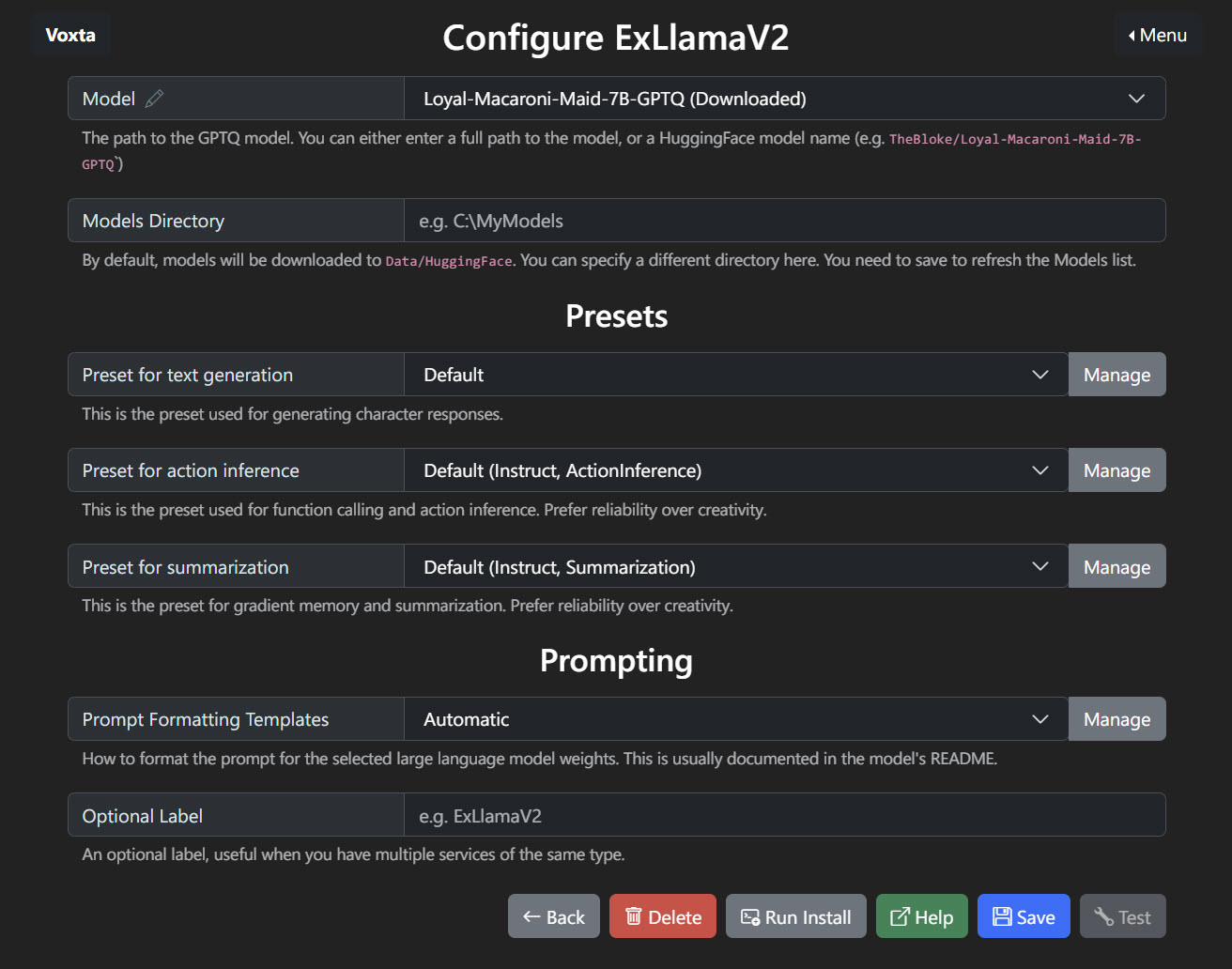

Model: This setting allows you to select the LLM that ExLlamaV2 will use. You can choose a specific model from a list or specify a model by its path or name from HuggingFace. For example, “Loyal-Macaroni-Maid-7B-GPTQ” is shown as the selected model in the dropdown menu.

Models Directory: Here you can define the directory where your models are stored or will be downloaded to. By default, models download to the ‘Data/HuggingFace’ directory, but a custom path can be set, such as ‘C:\MyModels’.

Presets:

- Preset for text generation: Defines the default setting for generating character responses.

- Preset for action inference: Controls the default setting for function calling and action inference, prioritizing reliability over creativity.

- Preset for summarization: Sets the default for gradient memory and summarization tasks, also favoring reliability.

Prompting:

- Prompt Formatting Templates: Specifies how prompts for the LLM should be formatted. The setting can be automatic, or a specific formatting template can be chosen.

Optional Label: A field to assign a custom label to this specific configuration of ExLlamaV2 for easier identification, especially when using multiple instances.